Set to launch within a few months, NISAR will use a technique called synthetic aperture radar to produce incredibly detailed maps of surface change on our planet.

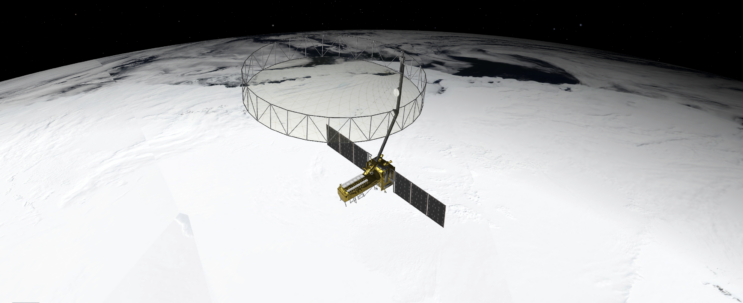

When NASA and the Indian Space Research Organization’s (ISRO) new Earth satellite NISAR (NASA-ISRO Synthetic Aperture Radar) launches in the coming months, it will capture images of Earth’s surface so detailed they will show how much small plots of land and ice are moving, down to fractions of an inch. Imaging nearly all of Earth’s solid surfaces twice every 12 days, it will see the flex of Earth’s crust before and after natural disasters such as earthquakes; it will monitor the motion of glaciers and ice sheets; and it will track ecosystem changes, including forest growth and deforestation.

The mission’s extraordinary capabilities come from the technique noted in its name: synthetic aperture radar, or SAR. Pioneered by NASA for use in space, SAR combines multiple measurements, taken as a radar flies overhead, to sharpen the scene below. It works like conventional radar, which uses microwaves to detect distant surfaces and objects, but steps up the data processing to reveal surface details at high resolution.

To get such detail without SAR, radar satellites would need antennas too enormous to launch, much less operate. At 39 feet (12 meters) wide when deployed, NISAR’s radar antenna reflector is as wide as a city bus is long. Yet it would have to be 12 miles (19 kilometers) in diameter for the mission’s L-band instrument, using traditional radar techniques, to image pixels of Earth down to 30 feet (10 meters) across.
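The antenna-size comparison follows from the diffraction limit of a conventional (real-aperture) radar: a beam's footprint on the ground is roughly wavelength × range ÷ antenna diameter. The sketch below inverts that relation. The wavelength (about 0.24 meters, typical of L-band) and the slant range (about 800 kilometers) are assumptions chosen for illustration, not mission specifications from this article.

```python
# Diffraction-limited resolution of a conventional (real-aperture) radar.
# ASSUMED, illustrative values: L-band wavelength ~0.24 m, slant range ~800 km.

WAVELENGTH_M = 0.24        # assumed L-band wavelength
SLANT_RANGE_M = 800_000.0  # assumed distance from antenna to the ground
METERS_PER_MILE = 1609.344


def real_aperture_resolution(antenna_diameter_m: float) -> float:
    """Approximate ground footprint width (m) of the beam: lambda * R / D."""
    return WAVELENGTH_M * SLANT_RANGE_M / antenna_diameter_m


def antenna_needed(target_resolution_m: float) -> float:
    """Antenna diameter (m) a real-aperture radar needs for a given pixel size."""
    return WAVELENGTH_M * SLANT_RANGE_M / target_resolution_m


if __name__ == "__main__":
    footprint = real_aperture_resolution(12.0)
    print(f"12 m antenna -> pixels about {footprint / 1000:.0f} km across")
    d = antenna_needed(10.0)
    print(f"10 m pixels -> antenna about {d / 1000:.1f} km ({d / METERS_PER_MILE:.0f} miles)")
```

Under these assumptions a 12-meter antenna would blur each pixel over about 16 kilometers, and 10-meter pixels would demand an antenna of about 19 kilometers, in line with the figure quoted above. SAR closes that gap through processing rather than a bigger dish.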

Synthetic aperture radar “allows us to refine things very accurately,” said Charles Elachi, who led NASA spaceborne SAR missions before serving as director of NASA’s Jet Propulsion Laboratory in Southern California from 2001 to 2016. “The NISAR mission will open a whole new realm to learn about our planet as a dynamic system.”

How SAR Works

Elachi arrived at JPL in 1971 after graduating from Caltech, joining a group of engineers developing a radar to study Venus’ surface. Then, as now, radar’s allure was simple: It could collect measurements day and night and see through clouds. The team’s work led to the Magellan mission to Venus in 1989 and several NASA space shuttle radar missions.

An orbiting radar operates on the same principles as one tracking planes at an airport. The spaceborne antenna emits microwave pulses toward Earth. When the pulses hit something, such as a volcanic cone, they scatter. The antenna receives the signals that echo back to the instrument, which measures their strength, their change in frequency, how long they took to return, and whether they bounced off multiple surfaces, such as buildings.
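Two of those measurements map directly onto distance and speed. The sketch below shows the standard conversions for a single echo: round-trip time to range, and frequency shift to line-of-sight speed. The 0.24-meter wavelength is an assumed L-band value for illustration.

```python
# Basic radar measurements for a single echo (illustrative sketch).
# ASSUMPTION: L-band wavelength of ~0.24 m.

C = 299_792_458.0    # speed of light, m/s
WAVELENGTH_M = 0.24  # assumed


def range_from_delay(round_trip_s: float) -> float:
    """Distance (m) to the scatterer; the pulse travels out and back, hence / 2."""
    return C * round_trip_s / 2


def radial_speed_from_doppler(doppler_hz: float) -> float:
    """Line-of-sight speed (m/s); positive means approaching.

    The reflected wave is shifted on the way out and again on the way back,
    so the two-way shift is 2 * speed / wavelength, hence the / 2 here.
    """
    return doppler_hz * WAVELENGTH_M / 2


if __name__ == "__main__":
    print(f"{range_from_delay(5.34e-3) / 1000:.0f} km")
    print(f"{radial_speed_from_doppler(100.0):.1f} m/s")
```

For example, an echo that returns after 5.34 milliseconds came from roughly 800 kilometers away.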

This information can reveal whether an object or surface is present, how far away it is, and how fast it is moving, but the resolution is too low to generate a clear picture. SAR, first conceived at Goodyear Aircraft Corp. in 1952, addresses that issue.

“It’s a technique to create high-resolution images from a low-resolution system,” said Paul Rosen, NISAR’s project scientist at JPL.

As the radar travels, its antenna continuously transmits microwaves and receives echoes from the surface. Because the instrument is moving relative to Earth, the frequency of the return signals shifts slightly. This shift, called the Doppler shift, is the same effect that causes a siren’s pitch to rise as a fire engine approaches and then fall as it departs.
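To see why the Doppler shift carries position information, the sketch below computes the two-way shift for one ground point as the radar flies past it. The geometry is idealized (straight flight path, flat Earth), and the platform speed, closest-approach range, and wavelength are illustrative assumptions rather than NISAR specifications.

```python
import math

# Doppler history of one ground point as a radar flies past (idealized).
# ASSUMED values: platform speed ~7,500 m/s, closest-approach range ~800 km,
# wavelength ~0.24 m. Straight-line, flat-Earth geometry.

V_M_S = 7_500.0
R0_M = 800_000.0
WAVELENGTH_M = 0.24


def doppler_hz(along_track_offset_m: float) -> float:
    """Two-way Doppler shift for a point `offset` ahead (+) or behind (-) the radar."""
    r = math.hypot(R0_M, along_track_offset_m)
    # Closing speed along the line of sight is V * offset / r.
    closing_speed = V_M_S * along_track_offset_m / r
    return 2 * closing_speed / WAVELENGTH_M


if __name__ == "__main__":
    for offset_km in (-5, -2, 0, 2, 5):
        print(f"{offset_km:+d} km: {doppler_hz(offset_km * 1000):+8.1f} Hz")
```

The shift runs from positive (point ahead, approaching) through zero (closest approach) to negative (point behind, receding), the radar counterpart of the rising and falling siren. Points at different along-track positions trace different versions of this sweep, which is what lets processing tell them apart.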

Computer processing of those signals is like a camera lens redirecting and focusing light to produce a sharp photograph. With SAR, the spacecraft’s path forms the “lens,” and the processing adjusts for the Doppler shifts, allowing the echoes to be aggregated into a single, focused image.
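The focusing step can be sketched as a toy matched filter. The example below uses time-domain backprojection, one simple way to undo each candidate point's expected phase history (the accumulated effect of its Doppler shifts) before summing the echoes coherently. It is not necessarily how NISAR's processor works; operational systems typically use faster frequency-domain algorithms. The geometry is hypothetical and scaled down so the example runs instantly, and range compression is ignored (a single range bin).

```python
import cmath
import math

# Toy SAR azimuth focusing by time-domain backprojection (matched filtering).
# HYPOTHETICAL, scaled-down geometry: 10 km closest-approach range,
# 0.24 m wavelength, 512 pulses spaced 1 m apart along track.

WAVELENGTH_M = 0.24
R0_M = 10_000.0
PULSE_POSITIONS = [k * 1.0 for k in range(512)]  # radar along-track positions (m)


def echo_phase(radar_x: float, target_x: float) -> complex:
    """Unit-amplitude echo whose phase is set by the two-way path 2 * R."""
    r = math.hypot(R0_M, radar_x - target_x)
    return cmath.exp(-4j * math.pi * r / WAVELENGTH_M)


def collect_echoes(target_x: float) -> list[complex]:
    """Raw data: one echo per pulse from a single point target."""
    return [echo_phase(x, target_x) for x in PULSE_POSITIONS]


def focus(echoes: list[complex], candidate_x: float) -> float:
    """Remove the phase history expected at `candidate_x`, then sum coherently."""
    total = sum(e * echo_phase(x, candidate_x).conjugate()
                for e, x in zip(echoes, PULSE_POSITIONS))
    return abs(total) / len(echoes)  # 1.0 means every echo added in phase


if __name__ == "__main__":
    raw = collect_echoes(target_x=256.0)
    grid = [200.0 + i for i in range(113)]  # candidate positions 200 m .. 312 m
    image = [focus(raw, gx) for gx in grid]
    peak = grid[image.index(max(image))]
    print(f"brightest pixel at {peak:.0f} m (true target at 256 m)")
```

Only at the true position do all 512 echoes line up in phase; elsewhere they partly cancel, so hundreds of blurry echoes collapse into one sharp pixel.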

Using SAR

One type of SAR-based visualization is an interferogram, a composite of two images taken at separate times that reveals surface changes by measuring the change in the delay of the echoes between them. Though they may look like modern art to the untrained eye, the multicolor concentric bands of interferograms show how far land surfaces have moved toward or away from the satellite: each full cycle of colors marks a fixed increment of motion, so the more bands, the greater the motion, and tightly packed bands mark where that motion changes sharply. Seismologists use these visualizations to measure land deformation from earthquakes.
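The link between color bands and motion can be shown for a single pixel. Multiplying the first image's complex value by the conjugate of the second leaves only their phase difference, which scales with the change in echo path length. The sketch assumes a 0.24-meter L-band wavelength and models echo phase as −4π × range ÷ wavelength; sign conventions differ between processors.

```python
import cmath
import math

# Interferogram phase -> line-of-sight (LOS) motion for one pixel.
# ASSUMPTIONS: L-band wavelength ~0.24 m; echo phase = -4*pi*R/lambda,
# so a positive result means the ground moved AWAY from the satellite.

WAVELENGTH_M = 0.24
FRINGE_M = WAVELENGTH_M / 2  # one full color cycle = half a wavelength of LOS motion


def simulate_pixel(range_m: float) -> complex:
    """Unit-amplitude complex SAR pixel for a scatterer at `range_m`."""
    return cmath.exp(-4j * math.pi * range_m / WAVELENGTH_M)


def los_displacement_m(first: complex, second: complex) -> float:
    """Wrapped phase difference -> displacement, valid only within +/- lambda/4."""
    phase = cmath.phase(first * second.conjugate())  # wrapped to (-pi, pi]
    return WAVELENGTH_M * phase / (4 * math.pi)


if __name__ == "__main__":
    before = simulate_pixel(1000.0)
    after = simulate_pixel(1000.0 + 0.02)  # ground moved 2 cm away
    print(f"LOS motion: {los_displacement_m(before, after) * 100:.1f} cm")
    print(f"motion per fringe: {FRINGE_M * 100:.0f} cm")
```

Because phase wraps every full cycle, motion larger than a quarter wavelength is ambiguous at a single pixel. That wrapping is what draws the repeating color bands, and recovering total motion requires counting bands across the image, a step known as phase unwrapping.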

Another type of SAR analysis, called polarimetry, measures the vertical or horizontal orientation of return waves relative to that of transmitted signals. Waves bouncing off linear structures like buildings tend to return in the same orientation, while those scattering through irregular features, like tree canopies, return a larger share in the other orientation. By mapping the differences and the strength of the return signals, researchers can identify an area’s land cover, which is useful for studying deforestation and flooding.
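A minimal way to use that difference is the cross-polarization ratio: the power returned in the other orientation divided by the power returned in the same orientation. The sketch below flags a pixel as canopy-like when that ratio is high. The −12 dB threshold and the two labels are hypothetical illustrations; real land-cover classifiers are calibrated per sensor, season, and region and use more than one feature.

```python
import math

# Rough land-cover flag from dual-polarization backscatter, one pixel at a time.
# HH = transmit horizontal, receive horizontal (same orientation).
# HV = transmit horizontal, receive vertical (the other orientation).
# HYPOTHETICAL threshold, for illustration only.

CROSS_POL_THRESHOLD_DB = -12.0


def cross_pol_ratio_db(hh_power: float, hv_power: float) -> float:
    """Other-orientation return relative to same-orientation return, in decibels."""
    return 10 * math.log10(hv_power / hh_power)


def label_pixel(hh_power: float, hv_power: float) -> str:
    """A strong cross-polarized share suggests canopy-like volume scattering."""
    if cross_pol_ratio_db(hh_power, hv_power) > CROSS_POL_THRESHOLD_DB:
        return "vegetation-like"
    return "structure-or-open-ground-like"


if __name__ == "__main__":
    print(label_pixel(1.0, 0.2))   # strong HV share
    print(label_pixel(1.0, 0.01))  # weak HV share
```

Mapping this label pixel by pixel, alongside overall signal strength, is a simplified version of the land-cover mapping described above.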

Such analyses are examples of ways NISAR will help researchers better understand processes that affect billions of lives.

“This mission packs in a wide range of science toward a common goal of studying our changing planet and the impacts of natural hazards,” said Deepak Putrevu, co-lead of the ISRO science team at the Space Applications Centre in Ahmedabad, India.

News Media Contacts

Andrew Wang / Jane J. Lee

Jet Propulsion Laboratory, Pasadena, Calif.

626-379-6874 / 818-354-0307

andrew.wang@jpl.nasa.gov / jane.j.lee@jpl.nasa.gov

2025-006

https://www.nasa.gov/missions/nisar/how-new-nasa-india-earth-satellite-nisar-will-see-earth/