Knowingly or unknowingly, Microsoft kicked off a race to integrate generative AI into search engines when it introduced Bing AI in February. Google seemingly rushed into an announcement just a day before Microsoft’s launch event, telling the world its generative AI chatbot would be called Bard. Since then, Google has opened up access to its ChatGPT and Bing AI rival, but while Microsoft’s offering has been embedded into its search and browser products, Bard remains a separate chatbot.

That doesn’t mean Google hasn’t been busy with generative AI. It’s infused basically all of its products with the stuff, while leaving Search largely untouched. That is, until now. At its I/O developer conference today, the company unveiled the Search Generative Experience (SGE) as part of the new experimental Search Labs platform. Users can sign up to test new projects, and SGE is one of three available at launch.

I checked out a demo of SGE during a briefing with Cathy Edwards, Google’s vice president of Search, and while it has some obvious similarities to Bing AI, there are notable differences as well.

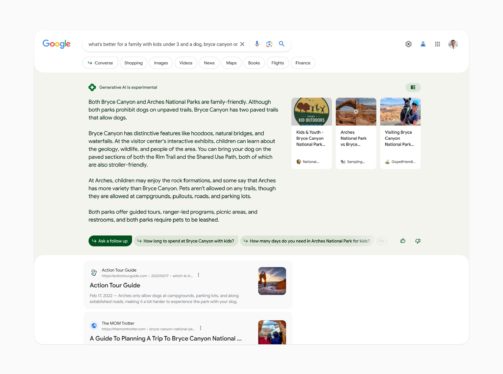

For one thing, SGE doesn’t look too different from your standard Google Search at first glance. The input bar is still the same (whereas Bing’s is larger and more like a compose box on Twitter). But the results page is where I first saw something new. Near the top of the page, just below the search bar but above all other results is a shaded section showing what the generative AI found. Google calls this the AI-powered snapshot containing “key information to consider, with links to dig deeper.”

At the top of this snapshot is a note reminding you that “Generative AI is experimental,” followed by answers to your question that SGE found from multiple sources online. On the top right is a button for you to expand the snapshot, as well as cards that show the articles from which the answers were drawn.

I asked Edwards to help me search for fun things to do in Frankfurt, Germany, as well as the best yoga poses for lower back pain. The typical search results showed up pretty much instantly, though the snapshot area showed a loading animation while the AI compiled its findings. After a few seconds, I saw a list of suggestions for the former, including Palmengarten and Römerberg. But when Edwards clicked the expand button, the snapshot opened up and revealed more questions that SGE thought might be relevant, along with answers. These included “is Frankfurt worth visiting” and “is three days enough to visit Frankfurt,” and the results for each included source articles.

My second question yielded more interesting findings. Not only did SGE show a list of suggested poses, expanding the answers brought up pictures in the source articles that gave a better idea of how to perform each one. Below the list was a suggestion to avoid yoga “if you have certain back problems, such as a spinal fracture or a herniated disc.” Further down in the snapshot, there was also a list of poses to avoid if you have lower back pain.

Importantly, the very bottom of the snapshot included a note saying “This is for informational purposes only. The information does not constitute medical advice or diagnosis.” Edwards said this is one of the safety features built into SGE, where the disclaimer shows up on sensitive topics that could affect a person’s health or financial decisions.

In addition, the snapshot doesn’t appear at all when Google’s algorithms detect that a query has to do with topics like self-harm, domestic violence or mental health crises. What you’ll see in those situations is the standard notice about how and where to get help.

Based on my brief and limited preview, SGE seemed at once similar to and different from Bing AI. When citing its sources, for example, SGE doesn’t show inline notations with footnotes linking to each article. Instead, it shows cards on the right or below each section, similar to how the cards on news results look.

Both Google’s and Microsoft’s layouts offer conversational views, with suggested follow-up prompts at the end of each response. But SGE doesn’t have an input bar at the bottom, and the search bar remains up top, outside of the snapshot. This makes it seem less like talking to a chatbot than Bing AI.

Google didn’t say it set out to build a conversational experience, though. It said, “With new generative AI capabilities in Search, we’re now taking more of the work out of searching.” Instead of having to do multiple searches to get at a specific answer or itinerary or process, you can just bundle your parameters into one query, like “What’s better for a family with kids under 3 and a dog, Bryce Canyon or Arches.”

The good news is that when you use the suggested responses in the snapshot, you can go into a new conversational mode. Here, “context will be carried over from question to question,” according to a press release. You’ll also be able to ask Google for help buying things online, and Edwards said the company sees 1.8 billion updates to its product listings every hour, helping keep information about supply and prices fresh and accurate. And since it’s Google after all, and Google relies heavily on ads to make money, SGE will also feature dedicated ad spaces throughout the page.

Google also said it would remain committed to making sure ads are distinguishable from organic search results. You can sign up to test the new SGE in Search Labs. The experiment will be available to all in the coming weeks, starting in the US in English. Look for the Labs icon in the Google app or Chrome desktop, and visit labs.google.com/search for more info.

This article originally appeared on Engadget at https://www.engadget.com/google-search-generative-experience-preview-a-familiar-yet-different-approach-175156245.html?src=rss