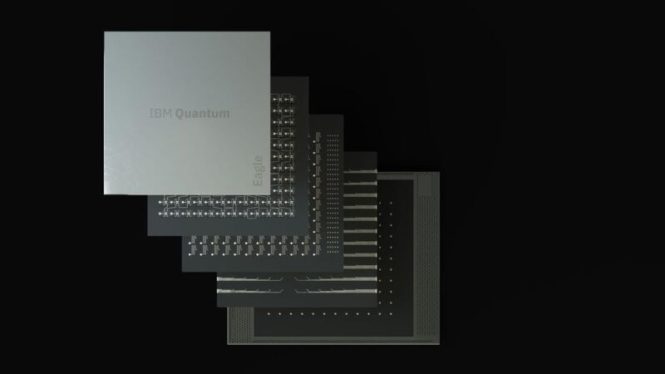

IBM’s Eagle processor has reached Rev3, which means lower-noise qubits. (credit: IBM)

Today’s quantum processors are error-prone. While the probabilities are small (less than 1 percent in many cases), every operation we perform on a qubit, including basic things like reading its state, has a meaningful chance of going wrong. If we try a calculation that needs a lot of qubits, or a lot of operations on a smaller number of qubits, then errors become inevitable.
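
To get a feel for why small per-operation error rates add up, here is a rough back-of-the-envelope sketch. It assumes every operation fails independently with the same probability, which is a simplification and not a description of IBM's hardware; the 1 percent figure is just the illustrative upper bound mentioned above, and the function name is made up for this example.

```python
def prob_at_least_one_error(p: float, n_ops: int) -> float:
    """Chance that at least one of n_ops operations fails.

    Assumes each operation fails independently with probability p
    (an idealized noise model, chosen only for illustration).
    """
    # Probability that all n_ops operations succeed is (1 - p) ** n_ops,
    # so the complement is the chance of at least one failure.
    return 1.0 - (1.0 - p) ** n_ops


if __name__ == "__main__":
    # Illustrative 1 percent error rate across growing operation counts.
    for n_ops in (10, 100, 1000):
        print(n_ops, prob_at_least_one_error(0.01, n_ops))
```

Under these assumptions, a hundred operations already fail more often than not, and a thousand fail almost every time.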

Long term, the plan is to solve that using error-corrected qubits. But these will require multiple high-quality qubits for every bit of information, meaning we’ll need thousands of qubits that are better than anything we can currently make. Given that we probably won’t reach that point until the next decade at the earliest, it raises the question of whether quantum computers can do anything interesting in the meantime.

In a publication in today’s Nature, IBM researchers make a strong case for the answer to that being yes. Using a technique termed “error mitigation,” they managed to overcome the problems with today’s qubits and produce an accurate result despite the noise in the system. And they did so in a way that clearly outperformed similar calculations on classical computers.
