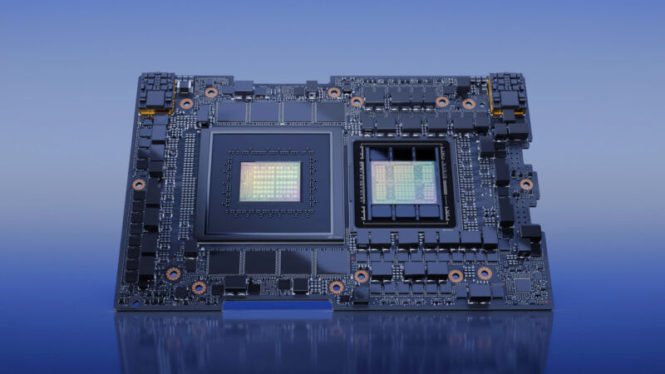

Nvidia’s GH200 “Grace Hopper” AI superchip. (credit: Nvidia)

Early last week at COMPUTEX, Nvidia announced that its new GH200 Grace Hopper “Superchip”—a combination CPU and GPU specifically created for large-scale AI applications—has entered full production. It’s a beast. It has 528 GPU tensor cores, supports up to 480GB of CPU RAM and 96GB of GPU RAM, and boasts a GPU memory bandwidth of up to 4TB per second.

We’ve previously covered the Nvidia H100 Hopper chip, which is currently Nvidia’s most powerful data center GPU. It powers AI models like OpenAI’s ChatGPT, and it marked a significant upgrade over 2020’s A100 chip, which powered the first round of training runs for many of the news-making generative AI chatbots and image generators we’re talking about today.

Faster GPUs roughly translate into more powerful generative AI models because they can run more matrix multiplications in parallel (and do it faster), and matrix multiplication is the core operation that today’s artificial neural networks depend on.
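
To make the matrix multiplication point concrete, here is a minimal Python sketch of a single neural network layer computed as one matrix product. The function names, sizes, and values are hypothetical and chosen only for illustration; they do not come from Nvidia's software or from any particular AI model.

```python
# Illustrative sketch: one dense neural-network layer is a matrix multiplication.
# All names and numbers are hypothetical, not taken from any Nvidia software.

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Each of the n*m output cells is an independent dot product,
    # which is why a GPU can compute many of them at the same time.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]

def multiply_add_count(n, k, m):
    """Number of multiply-add operations in an (n x k) @ (k x m) product."""
    return n * k * m

inputs = [[1.0, 2.0], [3.0, 4.0]]                 # 2 samples, 2 features each
weights = [[0.5, -1.0, 0.0], [0.25, 0.0, 2.0]]    # 2 inputs -> 3 outputs
outputs = matmul(inputs, weights)                 # one layer's forward pass

print(outputs)
print(multiply_add_count(2, 2, 3))
```

Even this toy layer needs 12 multiply-add operations, and the count grows as the product of the three matrix dimensions. Because every output cell can be computed independently, hardware that runs more of those operations at once finishes the same layer sooner.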
