First, the bad news: it’s really hard to detect AI-generated images. The telltale signs that used to be giveaways — warped hands and jumbled text — are increasingly rare as AI models improve at a dizzying pace.

It’s no longer obvious which images were created using popular tools like Midjourney, Stable Diffusion, DALL-E, and Gemini. In fact, AI-generated images are duping people more and more often, which has made them a major vehicle for spreading misinformation. The good news is that it’s usually not impossible to identify AI-generated images, but it takes more effort than it used to.

AI image detectors – proceed with caution

AI image detectors use computer vision to examine pixel patterns and estimate the likelihood that an image is AI-generated. They aren’t completely foolproof, but they’re a good way for the average person to determine whether an image merits some scrutiny, especially when it’s not immediately obvious.

“Unfortunately, for the human eye — and there are studies — it’s about a fifty-fifty chance that a person gets it,” said Anatoly Kvitnitsky, CEO of AI image detection platform AI or Not. “But for AI detection for images, due to the pixel-like patterns, those still exist, even as the models continue to get better.” Kvitnitsky claims AI or Not achieves a 98 percent accuracy rate on average.

Other AI detectors that have generally high success rates include Hive Moderation, SDXL Detector on Hugging Face, and Illuminarty. We tested ten AI-generated images on all of these detectors to see how they did.

AI or Not

Unlike other AI image detectors, AI or Not gives a simple “yes” or “no” verdict, and it correctly said our test image was AI-generated. With the free plan, you get 10 uploads a month. We tried it with 10 images and got an 80 percent success rate.

Credit: Screenshot: Mashable / AI or Not

Hive Moderation

We tried Hive Moderation’s free demo tool with more than 10 different images and got a 90 percent overall success rate, meaning it gave those images a high probability of being AI-generated. However, it failed to detect the AI qualities of an artificial image of a chipmunk army scaling a rock wall.

Credit: Screenshot: Mashable / Hive Moderation

SDXL Detector

The SDXL Detector on Hugging Face takes a few seconds to load, and you might get an error on the first try, but it’s completely free. Instead of a yes-or-no verdict, it gives a probability percentage. It gave 70 percent of the AI-generated images a high probability of being AI-generated.

Credit: Screenshot: Mashable / SDXL Detector

Illuminarty

Illuminarty has a free plan that provides basic AI image detection. Out of the 10 AI-generated images we uploaded, it classified 50 percent as having a very low probability of being AI-generated. To the horror of rodent biologists, it gave the infamous rat dick image a low probability of being AI-generated.

Credit: Screenshot: Mashable / Illuminarty

As you can see, AI detectors are mostly pretty good, but not infallible and shouldn’t be used as the only way to authenticate an image. Sometimes, they’re able to detect deceptive AI-generated images even though they look real, and sometimes they get it wrong with images that are clearly AI creations. This is exactly why a combination of methods is best.
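
One way to picture the “combination of methods” idea is to treat each detector’s output as one vote rather than a final verdict. The Python sketch below is only an illustration: the scores, the 0.5 threshold, the detector names, and the function name `summarize_detectors` are all hypothetical and don’t come from any of the tools above.

```python
from statistics import mean


def summarize_detectors(scores, threshold=0.5):
    """Summarize AI-likelihood scores (0.0 to 1.0) from several detectors.

    `scores` maps a detector name to the probability it reported.
    The threshold is an illustrative assumption, not a vendor setting.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    flagged = sorted(name for name, p in scores.items() if p >= threshold)
    return {
        "average": mean(scores.values()),
        "flagged_by": flagged,
        # Disagreement is itself a hint that the image needs a closer look.
        "detectors_disagree": 0 < len(flagged) < len(scores),
    }


# Hypothetical scores for a single image, for illustration only.
example = {"detector_a": 0.92, "detector_b": 0.15, "detector_c": 0.78}
summary = summarize_detectors(example)
print(summary)
```

When the detectors disagree, as they do in this made-up example, that’s a cue to fall back on the other checks described below instead of trusting any single score.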

Other tips and tricks

The ol’ reverse image search

Another way to detect AI-generated images is a simple reverse image search, which is what Bamshad Mobasher, professor of computer science and director of the Center for Web Intelligence at DePaul University College of Computing and Digital Media in Chicago, recommends. By uploading an image to Google Images or another reverse image search tool, you can trace the provenance of the image. If the photo shows an ostensibly real news event, “you may be able to determine that it’s fake or that the actual event didn’t happen,” said Mobasher.

Google’s “About this Image” tool

Google Search also has an “About this Image” feature that provides contextual information, like when the image was first indexed and where else it has appeared online. You can find it by clicking the three-dot icon in the upper right corner of an image.

Telltale signs that the naked eye can spot

Speaking of which, while AI-generated images are getting scarily good, it’s still worth looking for the telltale signs. As mentioned above, you might still occasionally see an image with warped hands, hair that looks a little too perfect, or text within the image that’s garbled or nonsensical. Our sibling site PCMag’s breakdown recommends looking in the background for blurred or warped objects, or subjects with flawless — and we mean no pores, flawless — skin.

At first glance, the Midjourney image below looks like an Instagram post of a Kardashian relative promoting a cookbook. But upon further inspection, you can see the contorted sugar jar, warped knuckles, and skin that’s a little too smooth.

Credit: Mashable / Midjourney

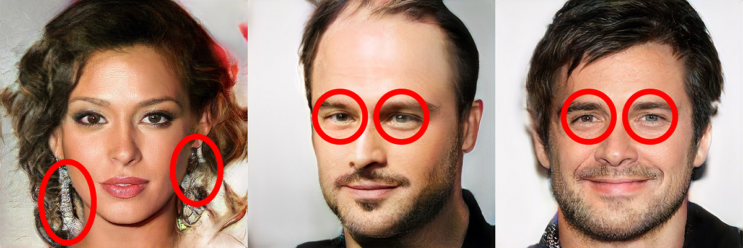

“AI can be good at generating the overall scene, but the devil is in the details,” wrote Sasha Luccioni, AI and climate lead at Hugging Face, in an email to Mashable. Look for “mostly small inconsistencies: extra fingers, asymmetrical jewelry or facial features, incongruities in objects (an extra handle on a teapot).”

Mobasher, who is also a fellow at the Institute of Electrical and Electronics Engineers (IEEE), said to zoom in and look for “odd details” like stray pixels and other inconsistencies, like subtly mismatched earrings.

“You may find part of the same image with the same focus being blurry but another part being super detailed,” Mobasher said. This is especially true in the backgrounds of images. “If you have signs with text and things like that in the backgrounds, a lot of times they end up being garbled or sometimes not even like an actual language,” he added.

This image of Volkswagen vans parading down a beach was created by Google’s Imagen 3. The sand and buses look flawlessly photorealistic. But look closely, and you’ll notice that the lettering on the third bus, where the VW logo should be, is just a garbled symbol, and there are amorphous splotches on the fourth bus.

Credit: Mashable / Google

It all comes down to AI literacy

None of the above methods will be all that useful if you don’t first pause while consuming media, particularly social media, to wonder whether what you’re seeing is AI-generated in the first place. Much like media literacy, which became a popular concept around the misinformation-rampant 2016 election, AI literacy is the first line of defense for determining what’s real and what’s not.

AI researchers Duri Long and Brian Magerko define AI literacy as “a set of competencies that enables individuals to critically evaluate AI technologies; communicate and collaborate effectively with AI; and use AI as a tool online, at home, and in the workplace.”

Knowing how generative AI works and what to look for is key. “It may sound cliche, but taking the time to verify the provenance and source of the content you see on social media is a good start,” said Luccioni.

Start by asking yourself about the source of the image in question and the context in which it appears. Who published the image? What does the accompanying text (if any) say about it? Have other people or media outlets published the image? How does the image, or the text accompanying it, make you feel? If it seems like it’s designed to enrage or entice you, think about why.

How some organizations are combatting the AI deepfakes and misinformation problem

As we’ve seen, so far the methods by which individuals can discern AI images from real ones are patchy and limited. To make matters worse, the spread of illicit or harmful AI-generated images is a double whammy because the posts circulate falsehoods, which then spawn mistrust in online media. But in the wake of generative AI, several initiatives have sprung up to bolster trust and transparency.

The Coalition for Content Provenance and Authenticity (C2PA) was founded by Adobe and Microsoft, and includes tech companies like OpenAI and Google, as well as media companies like Reuters and the BBC. C2PA provides clickable Content Credentials for identifying the provenance of images and whether they’re AI-generated. However, it’s up to the creators to attach the Content Credentials to an image.
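
For the curious, here is a rough Python sketch of how a script might check whether a JPEG appears to carry Content Credentials at all. It rests on an assumption about the C2PA format: that the manifest is embedded in the JPEG’s APP11 marker segments inside a container labeled `c2pa`. The function names are hypothetical, and this is a heuristic only. It doesn’t validate any signature, and a missing label proves nothing, since metadata is often stripped when images are re-saved or shared.

```python
import struct


def jpeg_app11_segments(data):
    """Yield the payload of each APP11 (0xFFEB) segment in a JPEG byte string."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing start-of-image marker")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # lost sync with the marker structure; stop scanning
        marker = data[pos + 1]
        if marker == 0xFF:
            pos += 1  # fill byte before a marker; skip it
            continue
        if marker in (0xDA, 0xD9):
            break  # start of compressed scan data, or end of image
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:
            break  # malformed segment length
        if marker == 0xEB:
            # The length field counts its own two bytes, so skip them.
            yield data[pos + 4:pos + 2 + length]
        pos += 2 + length


def may_have_content_credentials(data):
    """Heuristic (assumption): True if any APP11 segment mentions 'c2pa'."""
    return any(b"c2pa" in segment for segment in jpeg_app11_segments(data))


# Synthetic bytes for illustration only; these are not real, viewable images.
payload = b"JP\x00\x00c2pa"
with_label = (b"\xff\xd8\xff\xeb" + struct.pack(">H", len(payload) + 2)
              + payload + b"\xff\xd9")
without_label = b"\xff\xd8\xff\xd9"
print(may_have_content_credentials(with_label))
print(may_have_content_credentials(without_label))
```

To actually read and verify Content Credentials, use a tool built for the job, since a real check involves cryptographic signature validation that this sketch skips entirely.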

On the flip side, the Starling Lab at Stanford University is working hard to authenticate real images. Starling Lab verifies “sensitive digital records, such as the documentation of human rights violations, war crimes, and testimony of genocide,” and securely stores verified digital images in decentralized networks so they can’t be tampered with. The lab’s work isn’t user-facing, but its library of projects is a good resource for someone looking to authenticate images of, say, the war in Ukraine, or the presidential transition from Donald Trump to Joe Biden.
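
Tamper-evident storage of this kind generally relies on cryptographic hashing: record a fingerprint of the file when it’s verified, and any later change to the file produces a different fingerprint. The Python sketch below shows that general idea with SHA-256. It is not a description of Starling Lab’s actual pipeline, and the byte strings and the `fingerprint` helper are made up for illustration.

```python
import hashlib


def fingerprint(image_bytes):
    """Return the SHA-256 hex digest of an image's raw bytes."""
    return hashlib.sha256(image_bytes).hexdigest()


# Stand-in bytes for illustration; a real check would read the image file.
original = b"example image bytes"
registered = fingerprint(original)  # stored when the image is verified

tampered = original + b"\x00"  # even a one-byte change alters the hash

print(fingerprint(original) == registered)  # True
print(fingerprint(tampered) == registered)  # False
```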

Experts often talk about AI images in the context of hoaxes and misinformation, but AI imagery isn’t always meant to deceive per se. AI images are sometimes just jokes or memes removed from their original context, or they’re lazy advertising. Or maybe they’re just a form of creative expression with an intriguing new technology. But for better or worse, AI images are a fact of life now. And it’s up to you to detect them.

Credit: Mashable / xAI

https://mashable.com/article/how-to-identify-ai-generated-images
