(credit: imaginima | E+)

Following backlash over its search engine becoming the primary traffic source for deepfake porn websites, Google has started burying links to these sites in search results, Bloomberg reported.

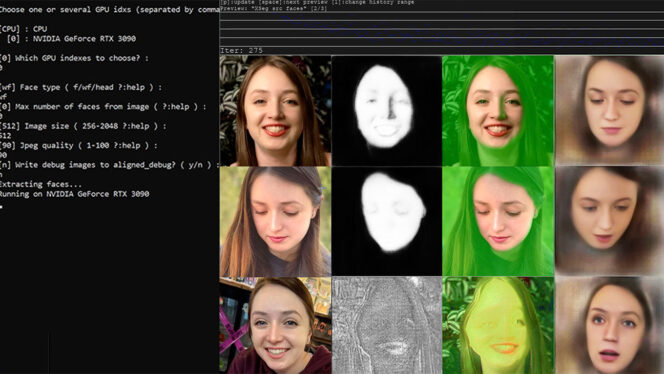

Over the past year, Google has been driving millions of visitors to controversial sites that distribute AI-generated pornography depicting real people in fake sex videos created without their consent, Similarweb found. Anyone can be targeted (police are already bogged down dealing with a flood of fake AI child sex images), but female celebrities are the most common victims. Their fake non-consensual intimate imagery is also more easily discoverable on Google: searching just about any famous name together with the keyword “deepfake” is enough to surface it, Bloomberg noted.

Google refers to this content as “involuntary fake” or “synthetic pornography.” The search engine offers victims a way to report such content whenever it appears in search results, and when processing these requests, Google also removes duplicates of any flagged deepfakes.
