Demis Hassabis, CEO of DeepMind Technologies and developer of AlphaGo, attends the AI Safety Summit at Bletchley Park on November 2, 2023 in Bletchley, England. (credit: Toby Melville – WPA Pool/Getty Images)

A system developed by Google’s DeepMind has set a new record for AI performance on geometry problems. DeepMind’s AlphaGeometry managed to solve 25 of the 30 geometry problems drawn from the International Mathematical Olympiad between 2000 and 2022.

That puts the software ahead of the vast majority of young mathematicians and just shy of IMO gold medalists. DeepMind estimates that the average gold medalist would have solved 26 out of 30 problems. Many view the IMO as the world’s most prestigious math competition for high school students.

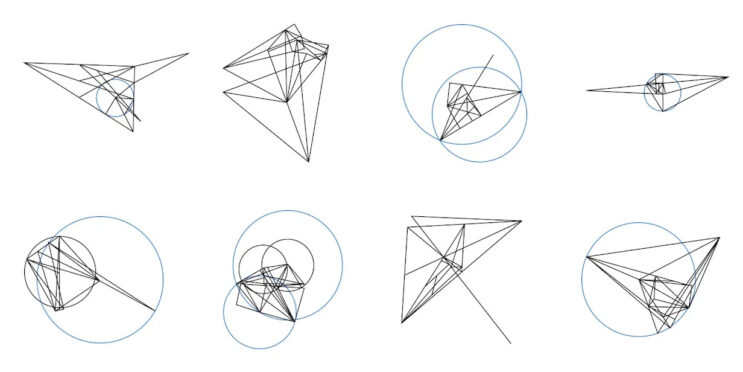

“Because language models excel at identifying general patterns and relationships in data, they can quickly predict potentially useful constructs, but often lack the ability to reason rigorously or explain their decisions,” DeepMind writes. To overcome this difficulty, DeepMind paired a language model with a more traditional symbolic deduction engine that performs algebraic and geometric reasoning.
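To make that pairing concrete, here is a minimal toy sketch of the general idea: a proposer suggests an auxiliary construct whenever a rule-based deduction engine gets stuck. It is not DeepMind's implementation. The `deduce` and `solve` functions, the string-encoded facts, the two hand-written rules, and the hard-coded `propose_midpoint` stand-in for a language model are all assumptions made for illustration.

```python
from typing import Callable, FrozenSet, List, Optional, Set, Tuple

# A rule says: if every premise is known, the conclusion is known too.
Rule = Tuple[FrozenSet[str], str]


def deduce(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Forward-chain over the rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def solve(
    facts: Set[str],
    goal: str,
    rules: List[Rule],
    propose: Callable[[Set[str]], Optional[str]],
    max_steps: int = 5,
) -> bool:
    """Alternate deduction with proposed constructs until the goal is reached."""
    known = deduce(facts, rules)
    for _ in range(max_steps):
        if goal in known:
            return True
        construct = propose(known)  # hypothetical stand-in for the language model
        if construct is None:
            return False  # the proposer has nothing left to suggest
        known = deduce(known | {construct}, rules)
    return goal in known


# Toy problem (hypothetical): the base angles of an isosceles triangle are equal.
MIDPOINT = "M is the midpoint of BC"
CONGRUENT = "triangle ABM is congruent to triangle ACM"
GOAL = "angle ABC = angle ACB"

RULES: List[Rule] = [
    (frozenset({"AB = AC", MIDPOINT}), CONGRUENT),
    (frozenset({CONGRUENT}), GOAL),
]


def propose_midpoint(known: Set[str]) -> Optional[str]:
    """Hard-coded proposer: suggest the midpoint once, then give up."""
    return None if MIDPOINT in known else MIDPOINT


print(solve({"AB = AC"}, GOAL, RULES, propose_midpoint))  # prints True
```

In this toy, the deduction engine alone cannot reach the goal from "AB = AC"; it succeeds only after the proposer adds the midpoint, which loosely mirrors the division of labor DeepMind describes.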
